Posts from — April 2010

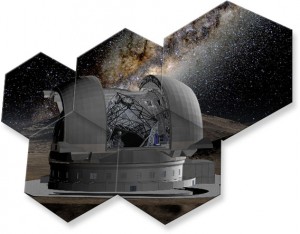

Why Chile will host the Extremely Large Telescope

Image courtesy ESO

The European Southern Observatory (ESO) has chosen Chile as the site for its next-generation telescope: the 42-metre E-ELT (European Extremely Large Telescope).

The E-ELT will be built on Cerro Armazones, a 3060-metre-high mountain, about 20 kilometres from Cerro Paranal, which hosts ESO’s Very Large Telescope.

These mountains are about a two-hour drive from the coastal town of Antofagasta. ESO chose Cerro Armazones for exactly the reasons it chose Cerro Paranal: some unique climatic conditions make the region great for astronomy.

These mountains are in the Atacama Desert, one of the driest regions of the world. It lies on the leeward side of the Andes, which act as a formidable barrier to the moisture-laden winds from the east. Some parts of the desert haven’t seen rain for years; some haven’t seen any in recent memory. Couple the dryness with the desert’s high altitude and the stillness of the air above it and you have some of the best “seeing,” as astronomers say, in the world. There’s little of the atmospheric turbulence that haunts even the best of telescopes.

Cerro Paranal is a 2,635-meter-high mountain in the Atacama Desert, about 120 kilometers south of Antofagasta and barely 12 kilometers inland from the Pacific coast. Despite a clear line of sight, the ocean is rarely seen from the mountain, as it is often obscured by a thick layer of clouds, the kind you would normally see from an airplane at 40,000 feet. These low-lying clouds are exactly what make Cerro Paranal (and hence Cerro Armazones) a perfect spot for astronomy. The cold Humboldt Current, flowing northward along the Chilean coast, creates a strong inversion layer, pulling the clouds down to well below the summit of Paranal, not just reducing the moisture in the air around its telescopes but also creating nearly 350 days of clear skies. The dry air above Paranal—low in water vapor—allows light from outer space to reach the telescopes without being absorbed by the atmosphere. Nowhere else on Earth do these climatic features—a high-altitude desert and an ocean-induced inversion layer—come together as they do in the Atacama.

Here are some pictures of a trip to Chile to see the Very Large Telescope.

April 26, 2023 2 Comments

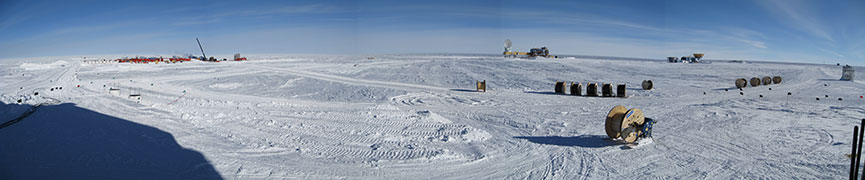

Moonshadow: IceCube raps to a Cat Stevens tune

IceCube drill camp

I’m being followed by a moon shadow, moon shadow-moon shadow, sang Cat Stevens. At the South Pole, the IceCube neutrino detector is singing much the same song.

IceCube is a neutrino telescope that is monitoring a cubic kilometer of ice at the South Pole for neutrino interactions. When a neutrino hits the ice, it can produce a charged particle called a muon. The muon streaks through the ice faster than the speed of light in ice (but not faster than the speed of light in vacuum, which is the absolute speed limit). This speeding muon creates a cone of blue light called Cherenkov light. By detecting this Cherenkov light, IceCube (and indeed many other neutrino detectors) can figure out the direction of the original neutrino.
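The "faster than light in ice" condition can be made concrete with a small sketch. It uses the standard Cherenkov relation cos θ = 1/(nβ), where β is the particle's speed as a fraction of the vacuum speed of light and n is the refractive index. The value n = 1.31 for ice is an assumption for illustration (it is not from the post), and the function names are hypothetical.

```python
import math

# Assumed refractive index of ice for blue light (illustrative, not from the post).
N_ICE = 1.31


def cherenkov_threshold_beta(n: float = N_ICE) -> float:
    """Minimum speed, as a fraction of c, above which Cherenkov light is emitted."""
    return 1.0 / n


def cherenkov_angle_deg(beta: float, n: float = N_ICE) -> float:
    """Half-angle of the Cherenkov cone, from cos(theta) = 1 / (n * beta)."""
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1")
    if beta <= cherenkov_threshold_beta(n):
        raise ValueError("particle is slower than light in the medium: no cone")
    return math.degrees(math.acos(1.0 / (n * beta)))


if __name__ == "__main__":
    print(f"threshold speed in ice: {cherenkov_threshold_beta():.3f} c")
    print(f"cone angle at beta = 0.9999: {cherenkov_angle_deg(0.9999):.1f} degrees")
```

Under this assumed index, a muon needs to travel at roughly three-quarters of the vacuum speed of light before it emits any Cherenkov light, and a near-light-speed muon produces a cone with a half-angle of about 40 degrees.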

But muons in the ice are coming not just from neutrino interactions. When cosmic rays hit the Earth’s atmosphere, they also generate muons, and these muons can also create Cherenkov light in the ice. It’s these cosmic ray-muons that are the source of a moon shadow. The moon blocks some of the cosmic rays reaching the Earth. When IceCube studies the entire sky for cosmic ray-muons, it sees a deficit of such muons coming from the direction of the moon. This is the moon’s shadow!

The moon’s shadow was first proposed in 1957.

IceCube is made of 80 strings of digital optical modules (DOMs), with each string containing 60 DOMs. Each DOM is capable of detecting the Cherenkov light emitted by a speeding muon.

In this paper, IceCube scientists report the results of 40 strings observing cosmic ray muons, operating between April 2008 and April 2009. A total of 8 lunar months’ worth of data was analysed by IceCube – and it clearly saw the shadow of the moon.

Why is the moon’s shadow important? It helps the IceCube scientists figure out that their telescope is pointing correctly. How does a bunch of strings of DOMs embedded in the ice function like a telescope that can point at a region of the sky? That’s for another blog post.

Meanwhile, here is a video I made in the IceCube lab at the South Pole in January 2008, of a display of muons streaking through the ice. First, a paragraph from The Edge of Physics describing the display:

The display is a graphic on a computer screen showing, in near real-time, the muons that are streaming through the ice. The ice is an inky black background, against which the IceCube strings are a line of dots, each dot a DOM frozen in the ice. The sky is a checkered pattern of blue lines. Every few seconds a muon comes streaking down from the sky, sometimes at an angle to the strings, sometimes straight down. The DOMs register the muon as it passes by, and they light up in one of five colors: red, orange, yellow, green, or blue. The closer a muon is to a DOM, the redder it looks; the farthest muons are blue. The greater the energy of the muon, the more the DOM flares up. I knew it was just a visual representation of what was happening kilometers beneath the surface, but it was hypnotic. Low-intensity muons caused the DOMs to light up a little, making them look like pearls. High-intensity muons caused nearby DOMs to become so big and bulbous that they overlapped and stuck together, like lumps of gummy candy. The display rotated slowly to provide a 3-D view, a psychedelic soiree of subatomic particles.

April 26, 2023 2 Comments

Hubble’s 20th anniversary: Not just pretty pictures

IT WAS TWENTY YEARS AGO, TODAY (well 24 April), that the Hubble Space Telescope was launched, making it the first optical telescope to be operated from space.

We have all been amazed and astounded by the images of galaxies and nebulae that have poured out of Hubble’s amazing vision.

But it’s important to highlight Hubble’s role in what has been the seminal discovery of the 1990s: Dark Energy.

From The Edge of Physics:

In the 1990s, two separate teams of astronomers had trained their telescopes to look deep into the nearby universe. One was led by Saul Perlmutter of Lawrence Berkeley National Laboratory and the other by Brian Schmidt of the Australian National University and Adam Riess, who was then at UC Berkeley. They were looking at individual stars in galaxies hundreds of millions, even billions, of light-years away. Normally, it’s impossible to see individual stars so far away. But these astronomers were after stars that were in their death throes—the exploding stars known as supernovae. Supernovae, in their final moments, can outshine their host galaxies and act as beacons, lighting our way to distant parts of our universe. The astronomers were hoping to confirm that the expansion of the universe was slowing down with age.

Both teams had been monitoring a special type of exploding star known as a type-Ia supernova. Such stellar explosions have become astronomy’s “standard candle,” meaning that we can determine, within limits, their absolute—that is, intrinsic—brightness and, as a result, their distance from us. This calculation is based on the relationship between how long such a supernova takes to reach peak brightness and then wane. This period can be accurately measured and is strongly correlated with the supernova’s absolute brightness. Then astronomers measure its apparent brightness—its brightness as seen from Earth—and its redshift. The apparent brightness, when compared with the absolute brightness, tells us the distance to the supernova, and its redshift is a measure of how much the universe has expanded since the supernova detonated. The idea was to gather data both from nearby and distant supernovae and then compare them to see how the expansion of the universe had changed over time.
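The step from apparent and absolute brightness to distance can be sketched with the textbook distance modulus, m − M = 5 log10(d / 10 pc). This is only an illustration: the peak absolute magnitude of −19.3 is an assumed typical value for type-Ia supernovae, the function names are hypothetical, and the sketch ignores dust and the cosmological corrections that matter at the distances the teams were probing.

```python
# Assumed typical peak absolute magnitude of a type-Ia supernova (illustrative).
ABS_MAG_TYPE_IA = -19.3

# Light-years per parsec (approximate conversion factor).
LIGHT_YEARS_PER_PARSEC = 3.2616


def distance_parsecs(apparent_mag: float, absolute_mag: float = ABS_MAG_TYPE_IA) -> float:
    """Distance implied by the distance modulus m - M = 5 * log10(d / 10 pc)."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


def distance_light_years(apparent_mag: float, absolute_mag: float = ABS_MAG_TYPE_IA) -> float:
    """Same distance, converted from parsecs to light-years."""
    return distance_parsecs(apparent_mag, absolute_mag) * LIGHT_YEARS_PER_PARSEC


if __name__ == "__main__":
    # A hypothetical supernova observed at apparent magnitude 24.
    print(f"{distance_light_years(24.0):.2e} light-years")
```

The fainter a supernova appears for the same intrinsic brightness, the larger the inferred distance, which is why supernovae that looked fainter than expected pointed to an accelerating expansion.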

And what they found stunned the world of physics: The expanding universe, instead of slowing down with age, or even just coasting along, is actually speeding up.

Einstein (him again) had shown that space could have an inherent energy and that this energy counters gravity. The supernova studies indicate that something akin to this is indeed happening. Some energy is countering gravity and causing the universe’s expansion to accelerate. Along with dark matter, there is now another puzzle: dark energy (sometimes referred to as the cosmological constant). Together they form the bulk of the universe.

But when the announcement was first made in 1998, there was still concern that there was something wrong with the observations. Were the supernovae appearing faint because of dust in the galaxies?

That’s when astronomers turned to the Hubble Space Telescope. Perlmutter’s team was the first to use the Hubble in 2000 to study a dozen galaxies. No dust. The results were good.

Then in 2002, the Hubble was fitted with the stunning Advanced Camera for Surveys (ACS), and a new team led by Riess found 25 more supernovae. The results were conclusive: the exploding stars were indeed fainter. The observations implied that about 73% of the universe is made of dark energy.

April 21, 2023 No Comments

What Einstein left behind

Einstein died on 18 April, 1955. A PhysicsWorld blog post relates how a LIFE magazine photographer was sent to Princeton to cover the event. But instead of going to the hospital, where Einstein had died of heart failure, photographer Ralph Morse went to the physicist’s home with a camera and a case of scotch, offered the superintendent there some scotch, and got access to Einstein’s office. Last week LIFE published ten touching photographs from The Day Einstein Died.

April 20, 2023 No Comments

How can shipwrecks help in the search for dark matter?

The CDMS detectors

WHAT have sunken ships got to do with neutrino detectors and dark matter? Well, the lead recovered from shipwrecks might turn out to be the most important metal in our attempt to understand neutrinos and dark matter.

Italy’s National Institute of Nuclear Physics (INFN) just reported that it took possession of 120 bricks of old lead from a museum in Sardinia. These bricks had been salvaged 20 years ago from a Roman ship that sank off the coast of Sardinia 2000 years ago. The INFN will be melting this lead and creating a 3-centimetre-thick lining that will surround its Cryogenic Underground Observatory for Rare Events (CUORE) detector, according to a news report in Nature.

CUORE is watching for an extremely rare event called neutrinoless double-beta decay, in which a radioactive element decays, and the decay products include two electrons, but no neutrinos (normal beta decay produces antineutrinos). Neutrinoless double-beta decay is theoretically predicted, but has never been convincingly observed. The experiment is monitoring 750 kilograms of tellurium dioxide for this rare event. The study will reveal more about the mass of neutrinos.

But the experiment can be fouled by ambient radioactivity, which can create a lot of noise in the detector. That’s why you need lead to shield the detector. However, new lead has an isotope called lead-210, which is itself radioactive, and can cause the same problems as ambient radioactivity. What’s a physicist to do?

Here’s where shipwrecks come in. In lead that has been lying on the seabed for a couple of thousand years, the lead-210 – which has a half-life of 22 years – has pretty much all decayed. Using this lead solves the problem.
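To see how thoroughly the lead-210 has decayed, here is a minimal sketch of the half-life arithmetic, using the 22-year half-life quoted above. The function name is hypothetical, and the sketch considers only the lead-210 present when the lead was cast, not any produced later by other impurities.

```python
# Half-life of lead-210 in years, as quoted in the post.
HALF_LIFE_PB210_YEARS = 22.0


def fraction_remaining(elapsed_years: float,
                       half_life_years: float = HALF_LIFE_PB210_YEARS) -> float:
    """Fraction of the original isotope left after the given time."""
    return 0.5 ** (elapsed_years / half_life_years)


if __name__ == "__main__":
    for years in (22, 220, 2000):
        print(f"after {years:>4} years: {fraction_remaining(years):.3e} of the lead-210 remains")
```

Two thousand years is about 91 half-lives, so the surviving fraction is far below one part in a trillion trillion.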

CUORE, however, is not the first experiment to hit upon this idea. The Cryogenic Dark Matter Search (CDMS) experiment, being performed in the Soudan Mine in Minnesota, has been using lead from shipwrecks for a similar purpose for years now. CDMS is looking for signals from dark matter particles hitting crystals of germanium or silicon (though none have been seen yet).

Here’s a paragraph from The Edge of Physics talking about the use of lead in the CDMS experiment:

One of the biggest sources of false signals, or noise, is ambient radioactivity. Even humans are radioactive enough to be a problem. So the germanium detectors had to be shielded inside layers of copper and lead. But lead can contain lead-210, a radioactive isotope with a half-life of twenty-two years (that is, after twenty-two years, half of a given amount of it will have radioactively decayed). So the team had to find old lead, in which most if not all of the lead-210 had decayed already. An Italian colleague mentioned that he had been using lead taken from two-thousand-year-old Roman ships that had sunk off the Italian coast. The CDMS team located a company that was selling lead salvaged from a ship that had sunk off the coast of France in the eighteenth century. Unaware that they were doing anything illegal, the researchers bought the lead. The company, however, got in trouble with French customs for selling archaeological material.

April 16, 2023 3 Comments

Of black holes, neutron stars and dark matter

A neutron star in X-rays

I just wrote a story for New Scientist on how black holes could form inside neutron stars, and how this could tell us more about the nature of dark matter.

Sounds bizarre? Well, even the researcher behind the work, Malcolm Fairbairn of King’s College London, acknowledged: “It’s slightly crazy, but not completely crazy”.

Here’s the basic idea: neutron stars, which are the remnants of supernova explosions and are the densest known stars, could attract particles of dark matter. If these neutron stars are in regions where the density of dark matter is very high (such as at the centre of the Milky Way), then interesting things could happen. But it all depends on the nature of dark matter.

Theoreticians’ favourite dark matter particle is the neutralino – which naturally arises from theories of supersymmetry (an extension to the standard model of particle physics). The neutralino happens to be its own anti-particle, so as a neutron star accretes neutralinos (or some such particle), the neutralinos will gather at the heart of the star and start annihilating each other. This will heat up the star.

Now, neutron stars start off very hot and cool rapidly. A neutron star that is being fuelled by dark matter would not cool down as fast. If somehow we could observe a population of neutron stars, then the distribution of hot versus cold stars could tell us whether dark matter is heating them. But there is a serious problem with trying to observe this phenomenon. This is most likely happening at the centre of the Milky Way, and our view at the relevant frequencies is obstructed by dust clouds. So, not a terribly exciting prospect.

But what if dark matter particles are not their own anti-particles? There are theories in which this is the case. Physicists are puzzled by the fact that the amount of dark matter in the universe is only about 5-6 times greater than normal matter. For cosmologists, 5-6 times greater is almost like saying the densities are equal. That’s because usually they are dealing with quantities that are orders and orders of magnitude apart. Two quantities that are not even an order of magnitude apart are close enough to require an explanation.

What if something similar happened during the creation of matter and dark matter? We know that there is an asymmetry in how much matter and antimatter were created after the big bang: a tiny bit more matter was created, leading to the universe we see; otherwise everything would have gone up in a puff of energy. Now, what if both dark matter and anti-dark matter particles were created, but again, the amount of dark matter was a smidgen more than anti-dark matter? That could lead to the observed amount of dark matter.

Such dark matter particles would not self-annihilate when they came into close proximity. So, if they started accreting inside a neutron star, they would form a dark matter star inside the neutron star. If the neutron star was in a region of dense dark matter, the accretion would continue unabated. Eventually, the dark matter star would exceed its “Chandrasekhar limit” – the point at which the star becomes unstable and collapses into a black hole!

[Technical Note: Such a dark matter particle would have to be fermionic; two fermions cannot occupy the same quantum state at the same time]

The black hole would instantaneously devour the neutron star, resulting in a burst of gamma-rays. Could such a phenomenon be the source of the mysterious gamma-ray bursts we see in the universe? Fairbairn points out that the favoured theories of GRBs involve a binary neutron star system in which the stars spiral inwards towards each other and eventually collide.

What’s to say GRBs are not the result of a black hole forming inside a neutron star?

Well, experiments, as always, will have the last word.

April 14, 2023 1 Comment

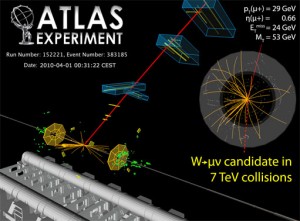

First W bosons seen at the Large Hadron Collider

image courtesy CERN and ATLAS

The ATLAS experiment has discovered the first W bosons at the Large Hadron Collider. Here’s the opening paragraph from the ATLAS newsletter reporting the discovery:

Less than a week after ATLAS saw its first high-energy collisions, two W-boson candidates were identified on Tuesday April 6th. ATLAS is the first (and so far, only) LHC experiment to have spotted Ws, one decaying to an electron and a neutrino, and the other to a muon and a neutrino.

So, what’s so great about seeing W bosons? After all, they have already been seen and studied. Well, as Fabiola Gianotti, the spokesperson of ATLAS, says (quoted in The Edge of Physics): “The signal of today becomes a calibration tool of tomorrow”. Here’s the paragraph from The Edge of Physics that puts this idea in perspective:

Before beginning its search for new physics, however, ATLAS will have to confirm what other detectors have found. The Tevatron collider and its detectors at Fermilab, near Chicago, have established to considerable precision the masses of the W boson and the top quark (which is one of the six types of quarks, and the heaviest), while the LEP did the same for the Z boson. ATLAS will have to find them, and not just that—the physicists will have to use these particles to fine-tune their new detectors, making sure they are working as designed. The top quark, discovered at Fermilab in 1995, is one of the particles physicists will use to calibrate ATLAS.

When I visited the ATLAS cavern for the research for The Edge of Physics, I was struck by the truth of this statement. The journey began in a building blandly called surface hall SX1. A wood-panelled structure designed to blend in with pastoral Switzerland, SX1’s real purpose was to camouflage the activities inside, and more importantly, beneath it. Inside this building were the two 60-metre-deep shafts that provided access to the ATLAS cavern below. As soon as we entered the hall, I saw rows of giant slabs of instruments in aluminium frames, each the size of a few snooker tables. Thick cables and bundles of wires snaked around them. These were muon chambers and were being calibrated using cosmic rays.

There had been a time when cosmic rays were themselves a mystery. In the early 1930s, physicists were just beginning to decipher these high-energy particles from outer space. It was becoming clear that cosmic rays, when they hit metal plates, were producing electrons and their anti-particles, positrons. Then in 1936, Caltech’s Carl Anderson and his student Seth Neddermeyer discovered a new particle using cosmic rays, but they didn’t know exactly what it was. Decades later it became clear that they had found a muon, a heavier cousin of the electron. Now muons, once exotica, have themselves become calibration tools, as was evident in the SX1 surface hall. When cosmic rays strike the upper atmosphere, they produce particles which are unstable and decay into a shower of negatively and positively charged muons. These were smashing into the muon chambers, allowing the physicists to test that their detectors were working. Once tested, these muon chambers would be lowered into the ATLAS cavern through one of the two shafts to help assemble the detector’s giant muon detectors. Tracking muons emerging from the collision of protons will be a crucial aspect of the search for new physics.

April 13, 2023 1 Comment

Why the LHC is like an experiment in space

The coldest ring in the universe?

The Large Hadron Collider (LHC) is inside a 27-kilometre-long tunnel that is 100 metres underground, near Geneva, Switzerland. Why is that like an experiment in space?

Well, for one, the magnets of the LHC are colder than outer space. At 1.9 K, the LHC easily puts outer space (which is at 2.73 K – the temperature of the cosmic microwave background) to shame.

But more than that, it’s the fact that the magnets have to be cooled down to 1.9 K that makes the LHC more like an experiment in outer space – in the sense that if something goes wrong, you can’t just go in there and repair it. You have to wait for the machine to be warmed back up to room (or rather tunnel) temperature, before engineers can go in and do their thing.

But why is the LHC at 1.9 K? For a given tunnel, the more energetic the particles, the more powerful the magnets need to be. The LHC was designed to fit into the tunnel that had previously housed the much less powerful Large Electron Positron (LEP) collider, which at its peak operated at an energy of 209 giga-electronvolts (GeV). The LHC is designed for 14 tera-electronvolts (TeV). It needed the next generation of superconducting coils, both for so-called radio frequency cavities used to accelerate protons around the tunnel and for the supremely powerful magnets needed to bend its high energy proton beams around the tunnel’s tight curve. The RF cavities and the magnets had to be compact to fit into the small-bore tunnel, and hence had to carry extremely high currents for their size.
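The link between beam energy and magnet strength in a fixed tunnel can be sketched with the standard relation for a charged particle on a circular path, p [GeV/c] ≈ 0.2998 × B [T] × ρ [m]. The bending radius of about 2,804 metres used here is an assumption for illustration (it is not from the post, and it is smaller than the tunnel's overall radius because the ring is not filled entirely with bending magnets). The function name is hypothetical. Note that the 14 TeV design figure is the collision energy, so each proton beam carries 7 TeV.

```python
# Conversion factor in p [GeV/c] = 0.2998 * B [T] * rho [m] for a unit charge.
GEV_PER_TESLA_METRE = 0.299792458

# Assumed effective bending radius of the dipole magnets, in metres (illustrative).
BENDING_RADIUS_M = 2804.0


def dipole_field_tesla(beam_momentum_gev: float,
                       bending_radius_m: float = BENDING_RADIUS_M) -> float:
    """Magnetic field needed to keep a singly charged particle on the given radius."""
    return beam_momentum_gev / (GEV_PER_TESLA_METRE * bending_radius_m)


if __name__ == "__main__":
    # For protons at these energies, momentum in GeV/c is essentially the energy in GeV.
    for beam_energy_gev in (450.0, 3500.0, 7000.0):
        field = dipole_field_tesla(beam_energy_gev)
        print(f"{beam_energy_gev:>6.0f} GeV per beam -> about {field:.2f} T")
```

Because the radius is fixed by the tunnel, the required field grows in direct proportion to the beam energy, which under these assumptions works out to a little over 8 tesla at 7 TeV per beam.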

The designers turned to coils made of niobium-titanium, the only ones that could be made in the industrial quantities required by the LHC. But generating the extra-strong magnetic fields for the machine meant cooling the coils down to 1.9 K rather than 4.5 K (the rather more easily-reached temperature of liquid helium), so that they could carry much more current. This, however, came at a price. At that temperature, liquid helium acts as a superfluid, with weird quantum properties. It has zero viscosity and can slip through microscopic cracks. So, the thousands and thousands of welds in the plumbing had to be at least as good as those in a nuclear plant.

It’s crucial that everything works perfectly, for repairing the LHC and its detectors once they are up and running is far from trivial. The LHC takes about 5 weeks to warm up. After repairs, the LHC’s 40,000 tonnes of magnets need to be cooled back down to 1.9 K, a process that also takes 5 weeks, requiring nearly 10,000 tonnes of liquid nitrogen and 130 tonnes of superfluid helium.

So, it’s not really a stretch to say that the LHC is like an experiment in outer space…almost.

April 12, 2023 2 Comments

Einstein’s Greatest Blunder: Was it, really?

IN an enlightening paper that appeared recently on the physics archive, physicists Carlo Rovelli and Eugenio Bianchi take on our prejudice against the so-called cosmological constant (aka dark energy), arguing that the popular notions about it are actually misconceptions.

Dark energy is the energy inherent in the fabric of spacetime, and is thought to be causing the expansion of the universe to accelerate. The equations of general relativity have a term in them (denoted by the Greek letter lambda) for the energy of spacetime.

In fact, Einstein himself had drawn attention to lambda in general relativity, and was later to call it his biggest blunder. Rovelli and Bianchi, while pointing out this is indeed true, argue that the popular understanding of why Einstein so regarded lambda is erroneous.

The equations of general relativity were showing Einstein that spacetime had to be either contracting or expanding. But at the time we thought we lived in a static universe. Einstein showed how lambda could be used to get a universe that was neither expanding nor contracting. Then, faced with the evidence of an expanding universe from Edwin Hubble’s observations with the 100-inch telescope atop Mount Wilson in California, Einstein retracted.

But did he call lambda his biggest blunder because it spoiled the beauty of his equations? Or was the great man acknowledging a unique mistake in a glittering career? Nothing of the sort, say Rovelli and Bianchi.

Between 1912 and 1915, Einstein had published all sort of wrong equations for the gravitational field – not to mention articles that are mathematically wrong, as Einstein himself later conceded. He later corrected his wrong equations and wrong conclusions, and never thought that a physically wrong published equation should be thought as a ‘great blunder’.

So, why this emphasis on the cosmological constant as his “biggest blunder”, if he never really considered other mistakes as blunders, but just part of the process of doing science?

The reason, say Rovelli and Bianchi, is that Einstein had been “spectacularly stupid” in his otherwise glorious early work in cosmology. Had he been courageous enough to face up to his own equations, he could have predicted a contracting or expanding universe, well before Hubble actually discovered galaxies that were racing away from us.

The man who had the courage to tell everybody that their ideas on space and time had to be changed, then did not dare predicting an expanding universe, even if his jewel theory was saying so.

But more importantly, Einstein did not introduce lambda specifically for predicting a static universe. He had shown in a 1916 paper that the equations of general relativity are not the most general possible, and that there could be an additional term (lambda) which would make the equations even more general (this appears in a footnote of the paper, along with the first usage of the term lambda).

Crucially, Einstein had to fine-tune the value of lambda in order to realize a stable and static universe. He chose lambda to be exactly equal to the energy density of matter in the universe. Physicists hate fine-tuning, preferring to derive explanations for why constants have the value they do.

The point here is that the term lambda was not Einstein’s biggest blunder. It was his misuse of it. Again, over to Rovelli and Bianchi:

[Einstein] chose to believe in the fine-tuned value…and wrote a paper claiming that his equations were compatible with a static universe! These are facts. No surprise that later he referred to all this as his ‘greatest blunder’: he had a spectacular prediction in his notebook and contrived his own theory to the point of making a mistake about stability, just to avoid making… a correct prediction! Even a total genius can be silly, at times.

April 7, 2023 No Comments

Of Toyotas, Cosmic Rays and Dark Energy

The Keck Telescopes on Mauna Kea

A recent blog post on Alan Boyle’s Cosmic Log talks about how cosmic rays could be the source of Toyota’s problems. Cosmic rays are high energy particles from outer space that constantly bombard the Earth. If they were to hit sensitive electronics, they have more than enough energy to cause problems, including flipping bits that could lead to serious errors.

How far-fetched is this?

Without really commenting on Toyota’s woes, the issue of cosmic rays affecting electronics is not new, and is certainly a concern. I first learned of it while on the summit of Mauna Kea. I was there to research the Keck Telescopes for The Edge of Physics. I was particularly interested in a spectrograph called DEIMOS (the DEep Imaging Multi-Object Spectrograph), a 12-foot-high, 20-foot-long, 8.6-ton monster.

DEIMOS is capable of taking spectra of about 140 galaxies simultaneously. I was in the Keck II control room in Waimea on the Big Island, watching astronomer Sandy Faber and her graduate student putting DEIMOS through its paces.

What intrigued me was that they were taking three “science” exposures for every patch of sky they were observing. I was told that it was to deal with any accidental flipping of bits by cosmic rays. DEIMOS uses a gigantic CCD array to capture the data, and if any pixel were to be hit by a cosmic ray it could momentarily brighten it. Now, when you are gathering faint light from galaxies billions of light years away (sometimes a single photon at a time), a cosmic ray hit could more than mess up the data. Here’s a paragraph from The Edge of Physics that talks about this issue:

Meanwhile, DEIMOS’s flexure compensation system was acting up. Faber talked about the spectrograph as if it were alive: “It’s gotten completely deranged,” she said. A few frantic moments later, everything was fixed, and DEIMOS was ready for use. The telescope, too, was pointing correctly. Faber set DEIMOS in motion with a few clicks of a computer mouse. Somewhere up on the summit, inside the Keck II dome, a slitmask moved into position. Once the telescope was locked and tracking, DEIMOS started moving with it. An image of the sky as seen by DEIMOS appeared on the computer screen in Waimea. Four known stars could be seen in square holes at the edges of the slitmask. These were the alignment stars, and they were slightly off-center. Yan sent commands to the telescope, fine-tuning its position, and checked again. The alignment was better, but not perfect. One more command. A less experienced team might have checked again to see if the stars were centered in their boxes, but Faber and Yan moved on to observing the galaxies. And sure enough, not just the four alignment stars but each one of the nearly 140 galaxies had lined up with their respective slits. Inside DEIMOS, a grating moved into place. The light from each galaxy was now being split into a spectrum. Three exposures followed. Taking three spectra meant that for each pixel they would have three readings. If any pixel were hit by a cosmic ray, making it brighter than usual, it would be discarded (a cosmic ray is unlikely to hit the same pixel twice in three exposures), and the average of the two dimmer readings would be used.
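The three-exposure trick described in that passage can be sketched in a few lines. This is a simplified illustration, not the actual DEIMOS reduction software: it always drops the brightest of the three readings for each pixel and averages the other two, whether or not a cosmic ray actually struck. The function name and the pixel values are hypothetical.

```python
def combine_three_exposures(exposures):
    """Combine three equally sized 2-D exposures, pixel by pixel.

    For each pixel, the brightest of the three readings is discarded
    (it may have been hit by a cosmic ray) and the two dimmer readings
    are averaged.
    """
    if len(exposures) != 3:
        raise ValueError("exactly three exposures are required")

    reference = exposures[0]
    combined = []
    for row_index in range(len(reference)):
        combined_row = []
        for col_index in range(len(reference[row_index])):
            readings = sorted(exp[row_index][col_index] for exp in exposures)
            combined_row.append((readings[0] + readings[1]) / 2.0)
        combined.append(combined_row)
    return combined


if __name__ == "__main__":
    # Made-up pixel counts; the 500 stands in for a cosmic-ray hit.
    first = [[10, 12], [11, 9]]
    second = [[11, 500], [10, 10]]
    third = [[9, 13], [12, 11]]
    print(combine_three_exposures([first, second, third]))
```

In the made-up data, the pixel that reads 500 in the second exposure ends up with the average of its two dimmer readings, so the spike never reaches the combined image.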

Well, what has all this got to do with Dark Energy? The DEIMOS spectrograph is being used to survey 50,000 distant galaxies. Their spectra will be used to figure out their distance and velocity, which then can be used to study how the expansion of the universe has changed with time. This, in turn, will lead to a better understanding of Dark Energy – the energy of spacetime that is thought to be causing the expansion of the universe to accelerate.

April 3, 2023 3 Comments